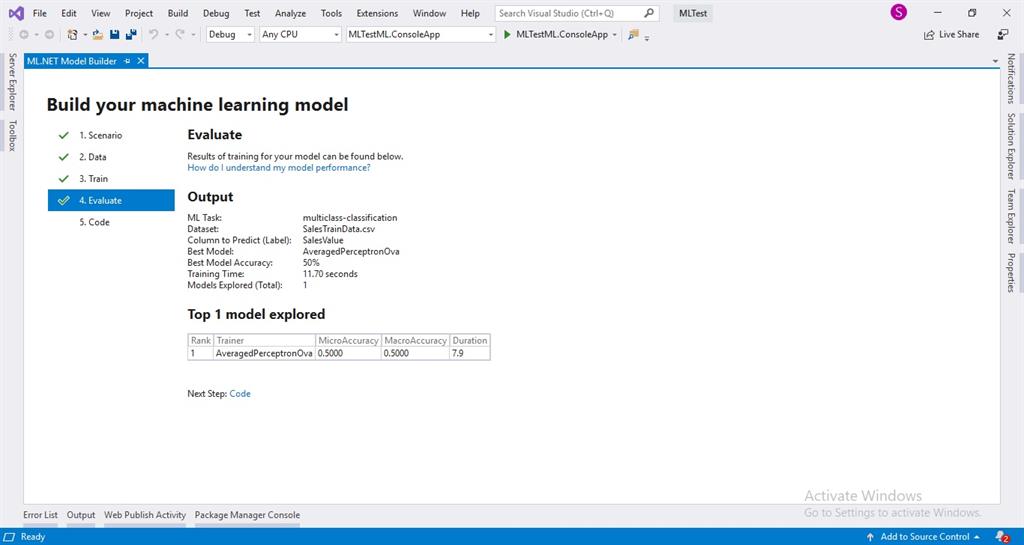

Model evaluation is probably the most important part of the training process, and you need to prepare your training data before you do it. Once you’ve cleaned up your training data set, set aside some of the data to use as a test data set. You can then check your model against this held-out data, comparing its predicted outputs with the known results.
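As a minimal sketch of holding back test data and checking a model against it, the example below splits an in-memory data set, trains on one part, and evaluates on the other. The `HouseData` class, its made-up rows, and the choice of an SDCA regression trainer are illustrative assumptions, not something the text prescribes.

```csharp
using System;
using System.Linq;
using Microsoft.ML;
using Microsoft.ML.Data;

// Hypothetical input row: one numeric feature (Size) and the value to predict (Price).
public class HouseData
{
    public float Size { get; set; }
    public float Price { get; set; }
}

public static class EvaluationSketch
{
    public static RegressionMetrics TrainAndEvaluate(MLContext mlContext)
    {
        // Made-up rows that follow Price = 2 * Size + 1.
        var rows = Enumerable.Range(1, 100)
            .Select(i => new HouseData { Size = i / 10f, Price = 2f * (i / 10f) + 1f })
            .ToArray();
        IDataView data = mlContext.Data.LoadFromEnumerable(rows);

        // Hold back roughly 20% of the rows as a test set.
        var split = mlContext.Data.TrainTestSplit(data, testFraction: 0.2);

        var pipeline = mlContext.Transforms
            .Concatenate("Features", nameof(HouseData.Size))
            .Append(mlContext.Regression.Trainers.Sdca(
                labelColumnName: nameof(HouseData.Price),
                featureColumnName: "Features"));

        // Train only on the training part of the split.
        ITransformer model = pipeline.Fit(split.TrainSet);

        // Score the held-out rows and compare predictions with the known prices.
        IDataView predictions = model.Transform(split.TestSet);
        return mlContext.Regression.Evaluate(
            predictions, labelColumnName: nameof(HouseData.Price));
    }

    public static void Main()
    {
        var mlContext = new MLContext(seed: 0);
        RegressionMetrics metrics = TrainAndEvaluate(mlContext);
        Console.WriteLine($"R-squared: {metrics.RSquared:F3}");
        Console.WriteLine($"RMSE: {metrics.RootMeanSquaredError:F3}");
    }
}
```

The metrics come only from rows the trainer never saw, which is what makes them a fair check of the model.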

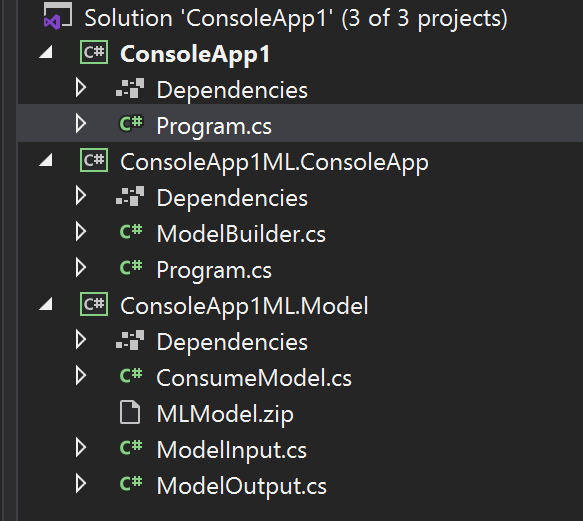

Once training has run, you need to evaluate the results and tune your model, iterating until you’re happy with its performance. Once the model is trained, save it as a binary file. To use it, load it into an ITransformer object, create a prediction engine with CreatePredictionEngine(), and call the engine’s Predict() method.
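The save, load, and predict steps might look like the sketch below. The file name `model.zip`, the `HouseData` and `PricePrediction` classes, and the tiny synthetic training set are all assumptions made for illustration.

```csharp
using System;
using System.Linq;
using Microsoft.ML;
using Microsoft.ML.Data;

// Hypothetical input row: one numeric feature (Size) and the value to predict (Price).
public class HouseData
{
    public float Size { get; set; }
    public float Price { get; set; }
}

// Hypothetical output row: regression trainers write their result to a "Score" column.
public class PricePrediction
{
    [ColumnName("Score")]
    public float Price { get; set; }
}

public static class PredictionSketch
{
    public static PricePrediction SaveLoadPredict(string modelPath)
    {
        var mlContext = new MLContext(seed: 0);

        // Train a small model on made-up rows so there is something to save.
        var rows = Enumerable.Range(1, 50)
            .Select(i => new HouseData { Size = i / 10f, Price = 2f * (i / 10f) + 1f })
            .ToArray();
        IDataView data = mlContext.Data.LoadFromEnumerable(rows);
        var pipeline = mlContext.Transforms
            .Concatenate("Features", nameof(HouseData.Size))
            .Append(mlContext.Regression.Trainers.Sdca(
                labelColumnName: nameof(HouseData.Price),
                featureColumnName: "Features"));
        ITransformer model = pipeline.Fit(data);

        // Save the trained model, together with its input schema, as a binary file.
        mlContext.Model.Save(model, data.Schema, modelPath);

        // Later, typically in another process: load the model back as an ITransformer.
        ITransformer loaded = mlContext.Model.Load(modelPath, out DataViewSchema inputSchema);

        // Create the prediction engine, then call Predict() on it for a single row.
        using (var engine = mlContext.Model
            .CreatePredictionEngine<HouseData, PricePrediction>(loaded))
        {
            return engine.Predict(new HouseData { Size = 2.5f });
        }
    }

    public static void Main()
    {
        PricePrediction prediction = SaveLoadPredict("model.zip");
        Console.WriteLine($"Predicted price: {prediction.Price}");
    }
}
```

Note that Predict() is a method of the engine returned by CreatePredictionEngine(), so the two calls are separate steps.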

ML.NET handles both training and inference, so you can use it to work with both training and live data, using an iterative process to fine-tune your model and improve accuracy. Start by declaring the ML.NET namespaces in your .NET code with using statements. Training data is initially loaded into an IDataView object and then used to train your chosen ML algorithm. You build an ML pipeline from a selection of extension methods that implement statistical and machine learning algorithms, load the data, and use Fit() to train your model.
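A minimal sketch of that flow, assuming a hypothetical `HouseData` class and made-up in-memory rows, could look like this. The SDCA regression trainer is just one possible choice of algorithm.

```csharp
using System;
using System.Linq;
using Microsoft.ML;

// Hypothetical input row: one numeric feature (Size) and the value to predict (Price).
public class HouseData
{
    public float Size { get; set; }
    public float Price { get; set; }
}

public static class PipelineSketch
{
    public static ITransformer Train(MLContext mlContext)
    {
        // Made-up rows that follow Price = 2 * Size + 1.
        var rows = Enumerable.Range(1, 50)
            .Select(i => new HouseData { Size = i / 10f, Price = 2f * (i / 10f) + 1f })
            .ToArray();

        // Training data is first loaded into an IDataView.
        IDataView trainingData = mlContext.Data.LoadFromEnumerable(rows);

        // The pipeline chains extension methods: a data transform, then a trainer.
        var pipeline = mlContext.Transforms
            .Concatenate("Features", nameof(HouseData.Size))
            .Append(mlContext.Regression.Trainers.Sdca(
                labelColumnName: nameof(HouseData.Price),
                featureColumnName: "Features"));

        // Fit() runs the training and returns the trained model.
        return pipeline.Fit(trainingData);
    }

    public static void Main()
    {
        var mlContext = new MLContext(seed: 0);
        ITransformer model = Train(mlContext);
        Console.WriteLine(model != null ? "Model trained." : "Training failed.");
    }
}
```

Nothing is trained until Fit() is called; the pipeline itself is only a description of the steps.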

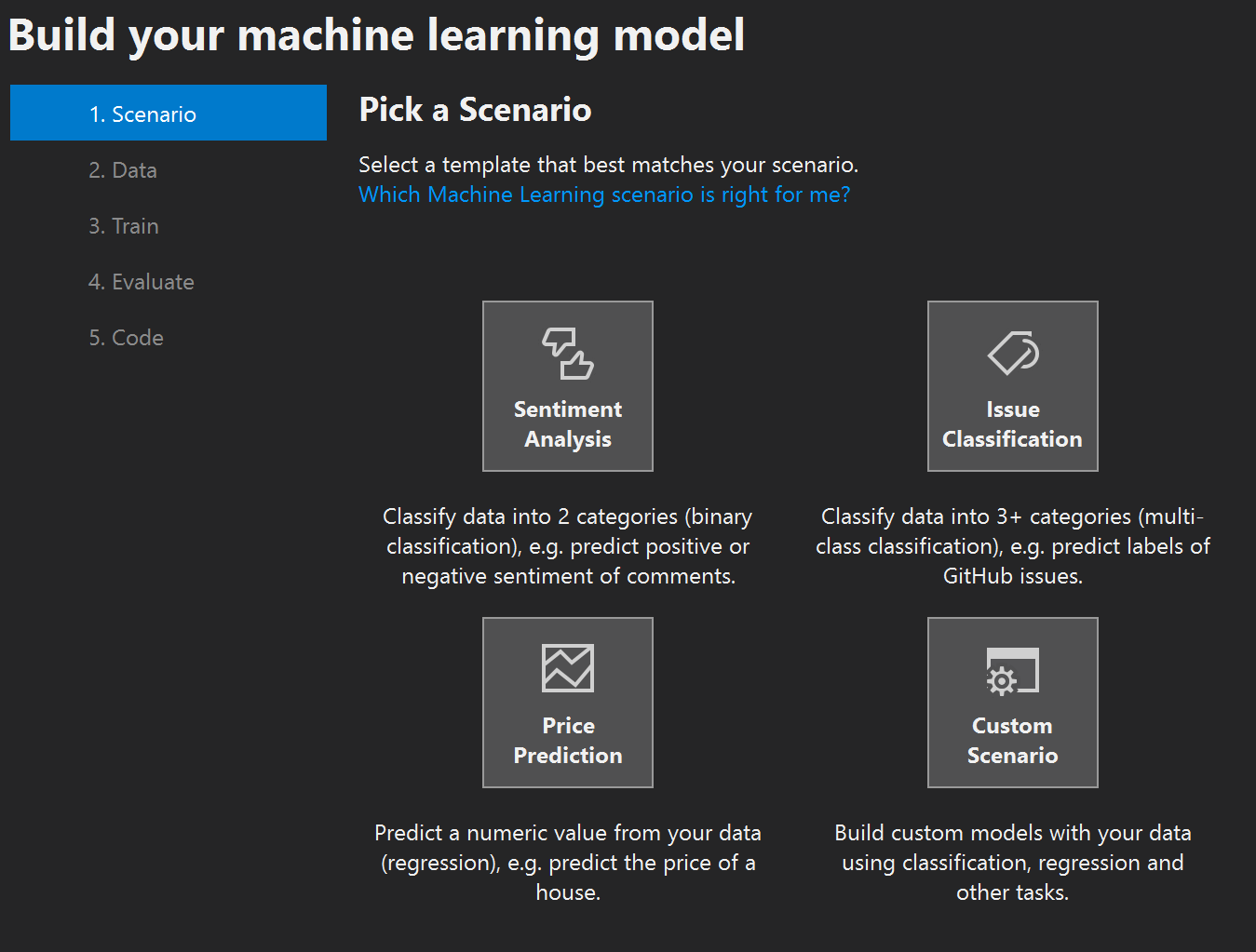

ML.NET Model Builder install

First download and install the ML.NET packages, and then add the appropriate libraries to your .NET project. By supporting as wide a selection of frameworks as possible, ML.NET gives you the option to pick and choose the ML models that are closest to your needs, fine-tuning them to fit.

ML.NET is extensible, so it works not only with Microsoft’s own ML tooling but also with other frameworks such as Google’s TensorFlow and the ONNX cross-platform model export technology.

ML.NET Model Builder mac

Machine learning is an important tool for modern application development. We’ve gone from the cold depths of an AI winter to an explosion of new neural networks and models, building on the hyperscale compute capabilities of the cloud and on the requirements of big data services. If you’re an AI researcher it’s an exciting time, with new discoveries and new tools arriving weekly.

But that’s only part of the story. Far more exciting is the democratization of machine learning. Research is a good thing, but it’s far better to put the results of that research into action and into the hands of developers. APIs like Microsoft’s Azure Cognitive Services are one way to do this, but not every application has a permanent connection to Azure, so it’s important to have ML tools that build into our everyday development environments and tools.

ML.NET is Microsoft’s open source, cross-platform machine learning tool for .NET Standard, running on Windows, macOS, and Linux systems.

If our dataset has no column named Label, we have to annotate the target field so that ML.NET treats it as the label (for another problem, we may have a different target feature to annotate). Before proceeding to build the training pipeline, let me introduce the MLContext catalog container. Inside this catalog, we can find all the trainers, data loaders, data transformers and predictors that we can use for a large variety of tasks: regression, classification, anomaly detection, recommendation, clustering, forecasting, image classification and object detection. Many of them are part of additional NuGet packages. The seed parameter of MLContext is used internally by splitters and by some trainers to make model training deterministic, which is very useful for unit tests.

There are multiple training algorithms for every kind of ML.NET task, and the trainers can be found in their corresponding trainer catalogs. For example, Stochastic Dual Coordinate Ascent, which we used in this article, is available as Sdca (for regression), SdcaNonCalibrated and SdcaLogisticRegression (for binary classification), and SdcaNonCalibrated and SdcaMaximumEntropy (for multiclass classification).
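The annotation and the catalogs can be sketched as follows. The `FlowerData` class and its property names are hypothetical, and the original article's own annotation code is not reproduced here; this only shows one way to map a target property to the Label column and where the SDCA trainers sit in an `MLContext`.

```csharp
using System;
using Microsoft.ML;
using Microsoft.ML.Data;

// Hypothetical input class: the target lives in a property called Species,
// so an attribute maps it to the column name "Label".
public class FlowerData
{
    public float PetalLength { get; set; }
    public float PetalWidth { get; set; }

    [ColumnName("Label")]
    public string Species { get; set; }
}

public static class CatalogSketch
{
    public static void Main()
    {
        // A fixed seed makes splitters and some trainers behave deterministically.
        var mlContext = new MLContext(seed: 1);

        // The annotated property should show up in the schema under the name "Label".
        IDataView data = mlContext.Data.LoadFromEnumerable(new[]
        {
            new FlowerData { PetalLength = 1.4f, PetalWidth = 0.2f, Species = "setosa" }
        });
        foreach (var column in data.Schema)
        {
            Console.WriteLine(column.Name);
        }

        // Each task has its own trainer catalog; SDCA appears in several of them.
        var regression = mlContext.Regression.Trainers.Sdca();
        var binaryCalibrated = mlContext.BinaryClassification.Trainers.SdcaLogisticRegression();
        var binaryNonCalibrated = mlContext.BinaryClassification.Trainers.SdcaNonCalibrated();
        var multiclassCalibrated = mlContext.MulticlassClassification.Trainers.SdcaMaximumEntropy();
        var multiclassNonCalibrated = mlContext.MulticlassClassification.Trainers.SdcaNonCalibrated();

        Console.WriteLine(regression.GetType().Name);
        Console.WriteLine(binaryCalibrated.GetType().Name);
        Console.WriteLine(binaryNonCalibrated.GetType().Name);
        Console.WriteLine(multiclassCalibrated.GetType().Name);
        Console.WriteLine(multiclassNonCalibrated.GetType().Name);
    }
}
```

A text label such as Species would still need converting to a key type before a multiclass trainer could use it; that step is left out of this sketch.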

ML.NET Model Builder series

At the end of the first part of the series we had a preprocessing pipeline ready to load data and concatenate the features selected for training into one special feature called Features, along with a target feature named Label, the category into which the selected features are classified.
